We have already covered how a dynamic look up works the same can be found out from the following link

http://dwbuddy.blogspot.com/2014/06/dynamic-look-up-in-informatica.html So let's see how we can use it in a regular SCD type 2 mapping.

The logic used in SCD type 2 is same as discussed in earlier blog i.e. http://dwbuddy.blogspot.com/2015/01/scd-type-ii-implementation-in.html. The only difference being here is we have used dynamic look up instead of a static one. We go for dynamic look up when there are duplicate entries for a PK value and only the latest record present in the file should be termed as active.

Let us consider the following sample data

So for EMP_ID 101 we have two records in the same day flow so the latest record i.e. with salary as 1500 should be termed as Active record.

The mapping will look in the following way

http://dwbuddy.blogspot.com/2014/06/dynamic-look-up-in-informatica.html So let's see how we can use it in a regular SCD type 2 mapping.

The logic used in SCD type 2 is same as discussed in earlier blog i.e. http://dwbuddy.blogspot.com/2015/01/scd-type-ii-implementation-in.html. The only difference being here is we have used dynamic look up instead of a static one. We go for dynamic look up when there are duplicate entries for a PK value and only the latest record present in the file should be termed as active.

Let us consider the following sample data

So for EMP_ID 101 we have two records in the same day flow so the latest record i.e. with salary as 1500 should be termed as Active record.

The mapping will look in the following way

So in here we have a dynamic lookup couple of expression transformations,a transaction control transformation which is used to check if there are more than one file is coming from source, a router to split records and an update strategy to update records.We have 3 targets in our flow TGT1 is for inserts, TGT2 is for updates and TGT3 is for reinserting of an exiting record with the latest value.

We create lookup on the target table (Employee) .We use both the columns EMP_ID and DEPT_ID for lookup condition.The properties are set as follows

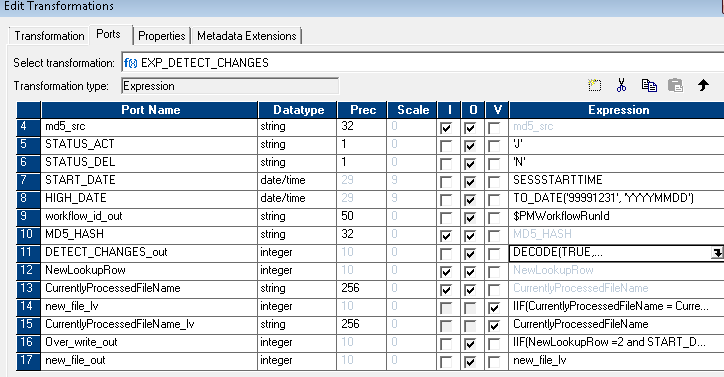

In expression transformation we compare the lookupdata and source data. In here we generate two columns md5_src and md5_lkp for comparison purpose

The decode condition will be DECODE(TRUE,

md5_lkp = md5_src, 0,

ISNULL(EMP_ID_lkp),1,

md5_lkp <> md5_src, 2)

0 means no change in data,1 means its a new record, 2 means record needs to be updated

To identify if there are more than record for the same PK value we create Over_write_out port with the following condition:

IIF(NewLookupRow =2 and START_DATE_LLKP = SESSSTARTTIME , 1, 0)

After expression transformation we have TC transformation this is used for performance purpose and to check if there are more than one file present at source. For this at exp we have following logic under port new_file_Iv IIF(CurrentlyProcessedFileName = CurrentlyProcessedFileName_lv, 0,1)

So at TC transformation we give the following condition:

IIF(new_file = 1 OR NEXTVAL = 100, TC_COMMIT_BEFORE, TC_CONTINUE_TRANSACTION)

Next at router we split into three groups with following condition

New (DETECT_CHANGES = 1 OR DETECT_CHANGES = 2) and Over_write_out = 0

Update DETECT_CHANGES = 2 and (Over_write_out = 0 or Over_write_out = 1)

Overwrite DETECT_CHANGES = 2 and Over_write_out = 1

'for updating records we have an update strategy based on PK and MD5_HASH value the following columns i.e. END_DATE and STATUS are updated.

Hope this article helps you in understanding SCD type II implementation using informatica

Comments

Post a Comment